Artificial intelligence is revolutionizing the world and transforming industries. Using AI, companies are running more efficiently, smarter, and safer. We’re only just beginning to realize the tremendous potential of AI, though. There is a large gap between what is and what could be.

McKinsey & Company famously predicted AI could create $13 trillion of value globally by 2030. On the other hand, a study by BCG and MIT found only 10% of companies are reporting strong ROI on their AI investments. The good news is there is still a lot of opportunity to deliver value with AI solutions. However, there are significant challenges and risks involved in creating that value with AI.

These are four lessons we have learned about commercializing AI.

1. For data, quality is more important than quantity

Anything around AI or machine learning (ML) needs data. Data is what AI runs on. In the past, quantity was very important (remember the era of “Big Data” in the 2010s?). However, recent advances in data-centric AI have moved the trend towards higher quality data rather than higher volumes of data.

Beyond the amount and quality of data required, collecting this data can be a major cost factor, especially for very early stage companies that have limited capital to expend. In some cases, data collection issues can be mitigated with publicly available data, but there are just as many cases where there’s absolutely no public data to go off.

An example from our portfolio:

We were helping a founding team develop a proof of concept with a Fortune 10 client to check for bias in their computer vision models. There were publicly available data relevant for this use-case but because this dataset was publicly available, the client could have used it during training. Testing with data that was used in training is cheating.

It’s very difficult to prove that no test example was used during training and we can’t just take their word they didn’t. That would be a big asterisk at the end of the POC’s conclusions. The client understood this and wanted us to use our own sequestered data set for the tests. Such a dataset could cost hundreds of thousands to millions of dollars to acquire, making for an expensive project.

While AI offers great opportunities, it is not without its pitfalls — especially around customer expectations.

2. Customers expect ML systems to work like other business software

Machine learning is an experiment-driven field and until something is built, it’s hard to know precisely how well it will work. This creates a mismatch between customers’ expectations and the reality of ML products.

Customers are used to traditional software which begins with a specification and ends with a deliverable to meet the spec. Traditional software tends to fit into a binary state, where either it works or it doesn’t. For example, you don’t have a 90% probability that a website functions.

Machine learning products don’t operate in such a black and white world. There’s a spectrum of performance in ML that depends on the domain and the customer’s needs, which together define what is a commercially acceptable level of performance.

Other ways ML products differ from traditional software that customers may not expect:

- Initial data collection can be quite expensive

- After getting the initial dataset, we might have to ask for more or better data to continue training the model. This cycle may repeat multiple times

- A production system’s performance can deteriorate over time. ML systems require regular monitoring for things like data drift to maintain desirable performance

- Biases in the system can be difficult to detect

- It’s not always crystal clear why the system gave a particular output

3. The unique risks of AI: Ongoing monitoring, bias, distributed ownership, and more

ML systems offer tremendous upside for making work smarter and taking care of routine tasks and freeing humans to focus on higher level problems. However there are real risks with ML systems that aren’t present with traditional software, most of which fall under governance.

Who is responsible for an ML system?

AI governance is often not formalized across different organizations. This presents problems down the line after the system is put into production and questions arise.

For example, a common scenario we’ve encountered when supporting portfolio companies with potential clients is that their ML systems are a mess and straining under the weight of their technical debt. Often it may be the case that the engineer who built the original system is no longer with the company anymore, and documentation they provided (if any) is spotty. No one knows what’s happening inside the model and they don’t dare change anything.

Even worse, the model may be biased and creating problems for the company—say it’s a system for determining the credit-worthiness of loan applicants. Why are certain applicants getting approved for mortgages and others are not? Why is the model biased? Without visibility into the model, we can’t tell if it’s being ethical or not. And we certainly can’t explain it either. The whole system becomes a black box, which becomes very problematic.

Distributed ownership adds another layer of complexity to this hypothetical scenario. Who is responsible for the model’s bias? Is it the data engineer who managed data acquisition? Is it the ML engineer who split the data and trained the model?

This is not a problem of team size or resourcing; these scenarios are happening all the time at large conglomerates, global financial services companies and ecommerce giants alike.

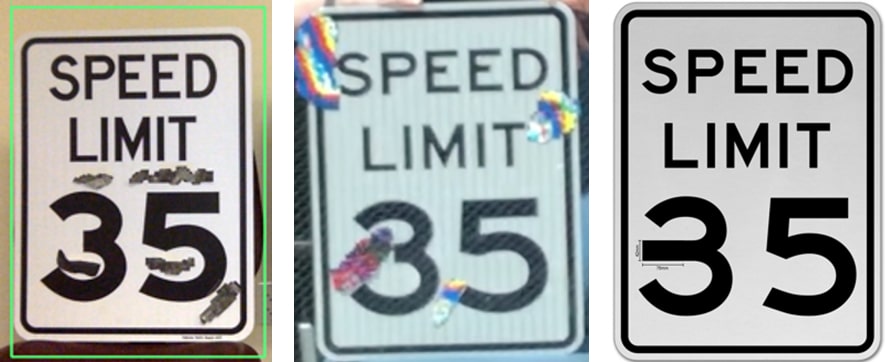

35-MPH signs that McAfee researchers altered with stickers. These signs fooled an autonomous vehicle system to read them as 45 MPH (left) and 85 MPH (center and right)

Another risk is in the form of adversarial AI attacks. This is where malicious actors attempt to fool models with deceptive data, thereby breaking the model. In a well-known example of this, researchers tricked an assisted driving system into seeing a stop sign as a 35 MPH sign simply by adding a small square to the sign. Slightly altering the “3” on a 35 MPH sign fooled the system into reading it as an 85 MPH sign.

On an AI team, who knows the model well enough to monitor it for both unintentional bias and drift, as well as malicious alterations? With these kinds of vulnerabilities in ML systems, it’s essential to have clear lines of responsibility to monitor for these types of attacks.

4. Teams need to stay focused on the business problem they are seeking to solve

A common point in failed AI projects we have seen is a lack of focus on the business problem.

For many years, a fairly consistent ratio of 1 out of 10 startups founded actually succeeded. Most startups fail because they don’t have product-market fit. The same is true with AI projects. If the project isn’t focused on a validated business need, then it’s not going to work.

Often, this happens when us technical people get excited about a shiny new technology and jump into coding too early without understanding the business context. This is especially dangerous for very early startups, where you have limited time and runway and need to focus on the things that really matter.

If you start with the code first and not a fully validated market need, then you may end up with a solution in search of a problem. This is usually a waste of time and resources. You may be able to refactor your code later to fit the business problem, but even then, it’s a longer route to a usable solution.

The AI architecture and experiments and proof of concept all have to be developed with the business context in mind. This can be tricky for tech folks with an academic background because in academia—where I started—you do a lot of experiments and if they work well, you write a paper. If they don’t, then you move onto the next thing. But in industry, you can’t just move on because experiments didn’t work. You have to examine the business problem and try again from another angle.

In 100 words or less…

When it comes to leveraging AI for good, the best is yet to come. There is practically unlimited potential for AI to deliver value, but there are several potholes and roadblocks on the road to get there. If you can get good quality data, manage customers’ expectations and educate them on the realities of AI systems, mitigate against risks unique to AI, and build your AI solution around a business problem with a vetted market need, then you’re well on your way.

Related Insights

The USB Moment for AI: Understanding Agent Protocols

Voice-first future: AI voice technology for startup founders and tech leaders

What’s next in AI for 2025?

What’s next in GenAI?

Navigating AI Transformation: Insights from Venture Studio and Corporate Partnerships