Editor’s note: A version of this article originally appeared on Machine Yearning.

How Technology Changes Societies

“First to AI supremacy?” Not so fast. When it comes to artificial intelligence, it doesn’t matter who’s first because it’s not a product in the way most pundits understand it.

“AI is the new electricity,” but electricity isn’t a product. Being first to have electricity doesn’t matter without the infrastructure to deliver it, the human capital to manage and improve upon it, and the standards to commercialize it. Benjamin Franklin famously conducted his kite experiment in 1752, and Thomas Edison patented the lightbulb in 1879, but it wasn’t until the 1920s that even half of American homes had electricity.

Like electricity, AI is a general-purpose technology – one with vast potential for nearly all sectors of the global economy. Talking about “AI supremacy” as if it were a zero-sum game is a fundamental misunderstanding of both how AI works and where AI power comes from. If institutions truly want to harness AI for large-scale transformations, then we need to create environments suitable for innovating upon it. We need to cultivate our societies into “innovation gardens,” instead of planting our flag on the moon and never going back.

A Tale of Two Theories

Before we get into it, let’s discuss the source of this misconception, so we know what to avoid when discussing AI’s potential.

There are two competing frameworks for understanding technological advancement in societies:

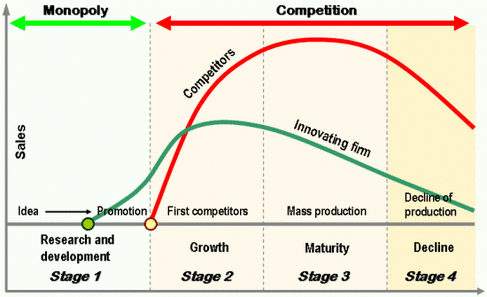

- The standard view is the leading sector theory of technological advancement, which emphasizes first-mover advantages in fast-growing industries (aka leading sectors). The impact is felt almost immediately and is concentrated in a key sector. In international political economy, a single nation-state first monopolizes the initial gains (sometimes called an “innovation monopoly”), then the industry spreads to other competing powers.

- An alternative theory, proposed by researcher Jeffrey Ding, a postdoctoral fellow at Stanford’s Center for International Security and Cooperation (CISAC) and Institute for Human-Centered AI (HAI), is the diffusion theory of general purpose technologies. This theory highlights longer, drawn-out trajectories of incremental improvements upon general technologies, which diffuse into broad sectors of the global economy over time. The impact comes later and is more dispersed.

High-profile products like smartphones and ridesharing fit neatly into the first framework. They have immediate use cases, benefit from network effects, and have winner-take-all dynamics.

After all, if you’re buying an iPhone, that’s the only phone you’re going to buy for the next 2 years. If you take an Uber, that’s one fewer of your 5 daily trips going to a taxi or Lyft. These are zero-sum games, for the most part, which fit into the product life cycle framework taught in most business school curricula.

The product life cycle curve shows a general expectation of sales trends for new products. First movers who invent the product achieve monopoly profits in the early stages of customer adoption, maintain wide margins against the nearest competitor in the growth stage, but wane as the market matures and declines.

Artificial intelligence does not share these characteristics of leading sector products:

- Like electricity, AI is a general purpose technology that, when first introduced, did not have a single commercial application.

- The open-source nature of most AI research means that state-of-the-art performance is not restricted to first movers; I can visit Hugging Face and deploy a GPT-like model for some web app in under an hour.

- It is highly pervasive, meaning it has applications for many sectors of the economy, rather than concentrating on a single sector.

A summary of these distinguishing factors is laid out below.

Mechanisms of Technological Change and Power Transitions

| Mechanism | Impact timeframe | Phase of relative advantage | Breadth of growth | Institutional complements | Examples |
| --- | --- | --- | --- | --- | --- |
| Leading Sector Product Cycles | Early stage | Monopoly on innovation | Concentrated | Deepen skill base in leading sector (LS) innovations | Gunpowder, smartphones, ridesharing |
| General Purpose Technology Diffusion | Late stage | Edge in diffusion | Dispersed | Widen skill base in spreading general purpose technologies (GPTs) | Ironworking, electricity, synthetic dyes, deep learning, quantum computing |

Source: 1/19/2022 HAI Weekly Seminar with Jeffrey Ding

Being First Isn’t Enough

Suffice it to say, it isn’t enough to just be first to invent a new general purpose technology. That alone does not guarantee a competitive moat. Instead of focusing on building innovation monopolies from one-off products, Ding suggests that the societies that see the greatest impact from technology diffusion are those that intentionally cultivate environments suitable for innovation.

These environments:

- Continuously improve state-of-the-art benchmarks (via patents, research, and academic papers)

- Upgrade human capital with formalized disciplines (e.g. machine learning engineering, AI product managers)

- Introduce and evangelize standards for development and production (e.g. MLOps)

- Inspire innovation chains of complementary technologies across broad sectors of the economy

It’s a more holistic and nuanced approach than the innovation-centric framework for leading sector technologies, which is more popular with pundits.
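To make the contrast between being first and diffusing fastest concrete, here is a toy sketch in Python. It assumes adoption follows a logistic S-curve, which is a common simplification and not a model taken from Ding’s work. The two economies, their start years, and their rates are hypothetical values chosen only for illustration.

```python
import math

def adoption_share(year, start_year, rate, midpoint_lag):
    """Share of the economy using the technology, on a logistic S-curve.

    Assumption: adoption is zero before start_year and reaches 50%
    midpoint_lag years after start_year. All parameters are hypothetical.
    """
    if year < start_year:
        return 0.0
    midpoint = start_year + midpoint_lag
    return 1.0 / (1.0 + math.exp(-rate * (year - midpoint)))

def first_year_at(threshold, start_year, rate, midpoint_lag, horizon=200):
    """Return the first year in which adoption reaches the threshold, or None."""
    for year in range(horizon + 1):
        if adoption_share(year, start_year, rate, midpoint_lag) >= threshold:
            return year
    return None

# Hypothetical economies: one invents first, the other starts a decade
# later but spreads the technology faster.
first_mover = dict(start_year=0, rate=0.15, midpoint_lag=40)
fast_diffuser = dict(start_year=10, rate=0.35, midpoint_lag=20)

first_mover_half = first_year_at(0.5, **first_mover)
fast_diffuser_half = first_year_at(0.5, **fast_diffuser)

print("First mover reaches 50% adoption in year", first_mover_half)
print("Fast diffuser reaches 50% adoption in year", fast_diffuser_half)
```

Under these assumed parameters the later entrant crosses the halfway mark before the inventor does, which is the point of the argument: an edge in diffusion can outweigh a head start.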

“AI is the New Electricity” ⚡️

Andrew Ng famously coined the phrase “AI is the new electricity,” claiming it will “transform every industry and create huge economic value.” If, like electricity, AI adoption follows a diffusion theory trajectory, what practical investments are required for institutions and countries to cultivate leading innovation gardens?

I would argue for a cohesive set of five focus areas for public-private partnerships:

- Clear Research and Development Goals

- Synergistic Infrastructure

- Human Capital Upgrades

- Commercial Standardization

- Responsible AI

1) Clear Research and Development Goals

Clear research and development objectives at the national level signal strategic interests that wouldn’t emerge from the commercial sector on its own, especially if they challenge a business’s cash cow. Typically, these objectives should align with a long-term vision or defend against mid- to long-term challenges, and they should set the proper incentives for development across the other four pillars.

For instance, an institution particularly sensitive to climate change might consider setting strategic R&D goals in quantum computing for climate and weather forecasting. Quantum systems have the potential to look much further into the future to forecast changes to complex systems – imagine predicting hurricane paths, landfalls, and flooding levels over a year in advance.

Another institution with relatively low computing power might set strategic R&D goals in more efficient models and hardware, such as brain-inspired AI. Most neural networks today use dense activation, where all neurons are activated to pass information through each layer, while the brain uses sparse activation, where only a few neurons are activated at a time. For comparison, the energy required to run the world’s fastest supercomputer would be enough to power a skyscraper, while the human brain can perform comparable computation on about the power it takes to light a lightbulb.
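The difference between dense and sparse activation can be sketched in a few lines of plain Python. This is a minimal illustration, not how any particular brain-inspired system works: the layer sizes, the random weights, and the top-k rule (keep only the k strongest neurons) are assumptions chosen for clarity.

```python
import math
import random

def dense_layer(inputs, weights):
    """Dense activation: every neuron computes tanh of its weighted sum."""
    return [math.tanh(sum(w * x for w, x in zip(row, inputs))) for row in weights]

def top_k_sparse(activations, k):
    """Sparse activation: keep the k largest-magnitude values, zero the rest."""
    ranked = sorted(range(len(activations)),
                    key=lambda i: abs(activations[i]), reverse=True)
    keep = set(ranked[:k])
    return [a if i in keep else 0.0 for i, a in enumerate(activations)]

random.seed(0)  # fixed seed so repeated runs give the same numbers
inputs = [random.uniform(-1, 1) for _ in range(8)]
weights = [[random.uniform(-1, 1) for _ in range(8)] for _ in range(16)]

dense_out = dense_layer(inputs, weights)
sparse_out = top_k_sparse(dense_out, k=3)

dense_active = sum(1 for a in dense_out if a != 0.0)
sparse_active = sum(1 for a in sparse_out if a != 0.0)
print(f"dense: {dense_active} of {len(dense_out)} neurons active")
print(f"sparse: {sparse_active} of {len(sparse_out)} neurons active")
```

Note that this toy still computes every neuron before discarding most of them, so it shows only the activation pattern. Any real energy savings would depend on models or hardware that skip the inactive units entirely.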

Though these basic research lanes aren’t likely to result in commercial offerings on a VC time scale, that isn’t the primary goal. The goal is creating the conditions for a quickly compounding chain of follow-on innovations.

2) Synergistic Infrastructure

Institutions should focus on building and supporting synergistic frameworks between open-source hardware, software, and cloud infrastructure.

If the past two years have taught us anything, it’s the fragility of the global silicon supply chain. Industry-wide over-reliance on just a few general-purpose chip manufacturers is a systemic risk for the global economy, with inventories under siege from players in nearly every industry.

Investing in antifragility for the hardware supply chain would be a good long-term bet. That means giving serious consideration to novel computing methods like neuromorphic computing or even quantum computing architectures, which promise to be far more efficient than the von Neumann architecture for some workloads.

Open-source software libraries like TensorFlow and PyTorch, as well as platforms like Hugging Face, are shortening AI application development time. Investing in these and other platforms for standardization across other AI paradigms should reap similar rewards.

Finally, development of national research clouds, as France, Japan, and China have done, should provide an antifragile alternative to incumbent cloud computing platforms, especially for basic research which may not have immediate revenue opportunities.

3) Human Capital Upgrades

As international competition for top researchers heats up, America is in particularly dire need of an upgrade in human capital.

American universities still attract the best international talent, and enjoy healthy ecosystems promoting basic AI research. But the rest of the US population suffers from incredibly low literacy rates on fundamental AI concepts. By some estimates, fewer than half of US high schools teach any computer science at all. Of those that do, the curricula have remained more or less unchanged for 15 years.

Upskilling startups like FourthBrain, Workera, and DeepLearning.AI are delivering the practical and highly relevant skill sets learners need to pivot into a career in AI. Factored is curating a deep bench of contractable AI/ML experts for multiple industries. Finally, in primary education, Kira Learning is designing a contemporary AI fundamentals curriculum for K-12 American students in all 50 states, the first of its kind.

4) Commercial Standardization

Like the Agile processes that formalized mechanical and software engineering workflows, MLOps is standardizing ML engineering in the workplace. Through MLOps, engineers and product managers are learning to develop AI products in virtuous closed loops rather than linear progressions. Startups like WhyLabs are formalizing these practices, making model development a core business process at many institutions instead of a data science side project.

5) Responsible AI

Much as concerns about the dangers of electricity inspired regulation and safety practices, we should anticipate a need for explainable AI (XAI) and the ability to audit model decision-making frameworks. This is especially relevant for “black box” models in mission-critical applications, such as loan applications or the criminal justice system, where hidden biases may have drastic and immediate impacts on humans’ well-being. Responsible AI startups are helping to ensure safe and transparent model deployment, addressing concerns about AI adoption in these critical sectors.

In 100 words or less…

Institutional AI supremacy will not be the result of one-off killer products, but of a concerted, holistic series of investments in resource infrastructure, human capital upgrades, and standards-setting that create environments which nurture and encourage innovation. These innovation gardens do not arise by default under any one system of governance; they are deliberately constructed. Without them, any first-mover advantages will quickly wane, and the world’s primary AI innovation center may shift elsewhere.
